Machine Learning Services and File System Interaction

- Posted in:

- R

- SQL Server

Using the R language to read & write to the Windows file system has generally been a great experience. There are numerous packages and functions that have made it (dare I say) easy. That experience can be a bit more challenging when invoking R code from SQL Server's Machine Learning Services.

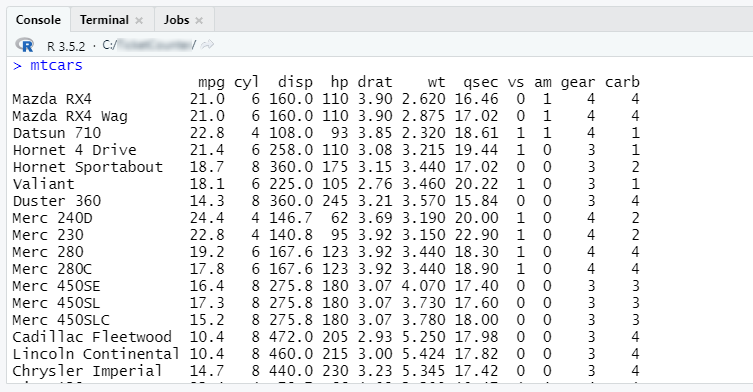

Let's look at some examples using RStudio and the mtcars data frame, a built-in set of automotive data:

We can write the data to a *.csv file with one line of code, and read the data back from the *.csv file with one line of code:

write.csv(mtcars, file = "D:/ETL/carsData.csv") read.csv(file = "D:/ETL/carsData.csv")

, read.csv().png)

Now let's try reading the data from file in SQL Server via sp_execute_external_script:

--Read CSV file from disk. EXEC sp_execute_external_script @language = N'R', @script = N' x<-read.csv(file = "D:/ETL/carsData.csv") ', @output_data_1_name = N'x';; GO Msg 39004, Level 16, State 20, Line 231 A 'R' script error occurred during execution of 'sp_execute_external_script' with HRESULT 0x80004004. Msg 39019, Level 16, State 2, Line 231 An external script error occurred: Error in file(file, "rt") : cannot open the connection Calls: source ... withVisible -> eval -> eval -> read.csv -> read.table -> file In addition: Warning message: In file(file, "rt") : cannot open file 'D:/ETL/carsData.csv': Permission denied Error in execution. Check the output for more information. Error in eval(ei, envir) : Error in execution. Check the output for more information. Calls: runScriptFile -> source -> withVisible -> eval -> eval -> .Call Execution halted

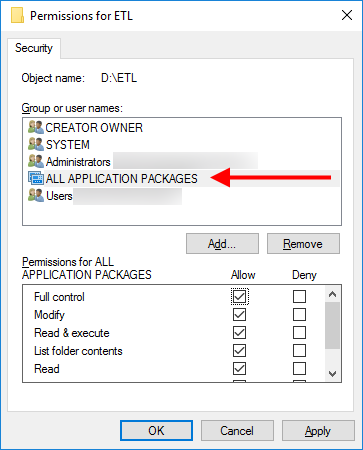

Within that rather long error message is cannot open file 'D:/ETL/carsData.csv': Permission denied. For SQL Server 2017 you needed to give Read & execute and/or Write permissions to the NT Service\MSSQLLaunchpad service user account for scripts that need access to various directories. But that step did not fix my error this time. Of course now I'm on SQL Server 2019. So did something change between versions? In a word, yes. The docs for SQL Server tell us the isolation mechanism for external processes changed starting with SQL Server 2019. In addition to the NT Service\MSSQLLaunchpad service user account, permissions must be granted to ALL APPLICATION PACKAGES.

Once appropriate permissions were in place, I was able to proceed. (Note: the documentation indicates permissions are needed by both accounts, but I only needed them for ALL APPLICATION PACKAGES.) Hats off to Microsoft for their documentation--all the info I needed is there and it's well presented. I had a hard time finding it though. I was probably not searching for the right phrase or key words. I eventually stumbled upon a forum where someone had a similar problem with sp_execute_external_script, but for a network share.

Working Directory

The documentation tells us "By default, external Python and R scripts only have read access permission to their working directories." We can use R function getwd() to return the path of the working directory:

EXEC sp_execute_external_script @language = N'R', @script = N' x<-as.data.frame(getwd()) ', @output_data_1_name = N'x'; C:/ProgramData/SQL2019/Temp-R/Appcontainer1/D245BCED-2927-4616-B613-34084356328C

From my testing, it appears I can both read and write to the working directory:

EXEC sp_execute_external_script @language = N'R', @script = N' filename <- paste(getwd(), "mtcars.csv", sep = "/") write.csv(mtcars, file = filename) x<-as.data.frame(filename) ', @output_data_1_name = N'x'; C:/ProgramData/SQL2019/Temp-R/Appcontainer1/AF8DE0C7-DAB9-4DD3-B17D-7D71E02A3E4C/mtcars.csv

tempdir()

Another option for working with the file system is to use the per-session temporary directory, which (for Windows users) should be found in an environment variable (TMPDIR, TMP and TEMP). R function tempdir() returns a path that is a subdirectory of the per-session temporary directory (although it looks like more than just one subdirectory to my eye):

EXEC sp_execute_external_script @language = N'R', @script = N' x<-as.data.frame(tempdir()) ', @output_data_1_name = N'x'; C:\ProgramData\SQL2019\Temp-R\Appcontainer1\086E9291-E39B-4833-9479-F44F034BCFE8\Packages\c588c7e9867dd90ade627be0ad34ea5d-appcontainer1\AC\Temp\RtmpKSje60

Wait A Minute

A question that hasn't been posed yet is "Should I be creating files in the first place?" It's a fair question. Historically, there has been general guidance that you don't use SQL Server for interacting with the file system (see xp_cmdshell warnings, among others). You'd use something external to SQL Server instead, and invoke it from SQL Server Agent or elsewhere (an executable file, a PowerShell script, an SSIS package, etc.). Machine Learning Services certainly is different than the database engine, but I still think of it as part of SQL Server as a whole. And of course there's the fact that you invoke R scripts via T-SQL. I do feel some hesitancy to create files on the C drive where the operating system normally lives. As a best practice, we don't put database files on C (neither system nor user databases). It can be argued other SQL Server-related processes shouldn't write to C either. I'll let you be the judge.

Comments