Schema-on-Read for SQL Server?

- Posted in:

- Big Data

- Development

- JSON

- NoSQL

- T-SQL

I've grumbled that SQL Server doesn't natively provide a way to infer JSON schema in a query. It was pointed out to me that it's "schema on read or schema on write" when dealing with JSON data in SQL Server. I'd never heard that phrase before, and it certainly spelled things out in a clear and concise way.

Nonetheless, I had also concluded dynamic T-SQL could be used for the OPENJSON function...and that it would be icky. And here we are. Yes, I went down that path. And yes, it was sorta icky. Let's take a look.

In my estimation, I would need to use one of two options along with the OPENJSON function:

- Explicitly specify schema using the WITH clause to format the output.

- Define schema in the SELECT list.

Here are examples of each:

DECLARE @JsonData NVARCHAR(MAX) = '[

{

"ContactTypeID": 1,

"Name": "Accounting Manager",

"ModifiedDate": "2002-06-01T00:00:00"

},

{

"ContactTypeID": 2,

"Name": "Assistant Sales Agent",

"ModifiedDate": "2002-06-01T00:00:00"

},

{

"ContactTypeID": 3,

"Name": "Assistant Sales Representative",

"ModifiedDate": "2002-13-01T00:00:00"

}

]'

--Option 1

SELECT *

FROM OPENJSON(@JsonData)

WITH (

ContactTypeID INT,

Name NVARCHAR(50),

ModifiedDate DATETIME

)

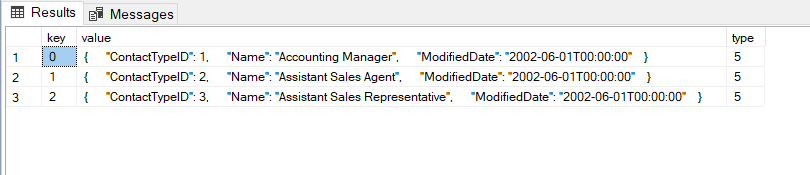

--Option 2

SELECT

TRY_CAST(JSON_VALUE(j.value, '$.ContactTypeID') AS INT) AS ContactTypeID,

TRY_CAST(JSON_VALUE(j.value, '$.Name') AS NVARCHAR(50)) AS Name,

TRY_CAST(JSON_VALUE(j.value, '$.ModifiedDate') AS DATETIME) AS ModifiedDate

FROM OPENJSON(@JsonData) j

Option #1 results in an error due to the invalid date (highlighted):

Msg 242, Level 16, State 3, Line 19

The conversion of a nvarchar data type to a datetime data type resulted in an out-of-range value.

On the other hand, Option #2 is more forgiving. The use of TRY_CAST does not cause any errors when invalid data is encountered (NULL is returned instead), and all data "rows" are in the result set.

OpenJsonTabular

The code for my stored procedure is below. It has a single input parameter for a string of JSON data. The name/value pairs of the first "row" of JSON data is parsed to obtain column names and types for the result set. A query string for the OPENJSON function is constructed and executed via EXEC. JSON's support for data types is pretty sparse compared to SQL Server (notice the big CASE expression where I attempt to do some mapping). If you're using SQL 2016, you'll have to make an edit for the STRING_AGG function.

USE tempdb;

GO

CREATE OR ALTER PROCEDURE dbo.OpenJsonTabular

@Json NVARCHAR(MAX)

AS

/*

Dynamically returns a resultset from the input JSON data.

The JSON data is assumed to be tabular/flat, with schema

inferred from the first "row" of JSON key/value pairs.

The JSON data is assumed to be in the format produced by the

FOR JSON clause with the AUTO option:

[

{ "Row1Column1Name": "Row1Column1Value", "Row1Column2Name": "Row1Column2Value", ... "Row1Column(n)Name": "Row1Column(n)Value" },

{ "Row2Column1Name": "Row2Column1Value", "Row2Column2Name": "Row2Column2Value", ... "Row2Column(n)Name": "Row2Column(n)Value" },

...

{ "Row(n)Column1Name": "Row(n)Column1Value", "Row(n)Column2Name": "Row(n)Column2Value", ... "Row(n)Column(n)Name": "RowColumn(n)Value" },

]

*/

BEGIN

DECLARE @Tsql NVARCHAR(MAX) = CHAR(9);

SELECT @Tsql = @Tsql + STRING_AGG(

'TRY_CAST(JSON_VALUE(j.value, ''$."' + CAST(k.[key] AS VARCHAR(MAX)) + '"'') AS ' +

--Try to map the JSON type to a SQL Server type.

CASE

--JSON null

WHEN k.type = 0 THEN 'VARCHAR(MAX)'

--JSON string (double-quoted Unicode with backslash escaping)

WHEN k.type = 1 THEN

CASE

WHEN TRY_CAST(k.[value] AS DATETIME) IS NOT NULL THEN 'DATETIME'

ELSE 'VARCHAR(MAX)'

END

----JSON number (double- precision floating-point format in JavaScript)

WHEN k.type = 2 THEN

CASE

WHEN k.[value] LIKE '%.%' THEN 'NUMERIC(38, 5)'

WHEN k.[value] LIKE '%,%' THEN 'NUMERIC(38, 5)'

ELSE 'BIGINT'

END

--JSON boolean ("true" or "false")

WHEN k.type = 3 THEN 'BIT'

--JSON array (ordered sequence of values)

WHEN k.type = 4 THEN 'VARCHAR(MAX)'

--JSON object (an unordered collection of key:value pairs)

WHEN k.type = 5 THEN 'VARCHAR(MAX)'

ELSE 'VARCHAR(MAX)' --null

END + ') AS ' + QUOTENAME(k.[key]), ', ' + CHAR(13) + CHAR(10) + CHAR(9) )

FROM OPENJSON(@Json) j

CROSS APPLY OPENJSON(j.value) k

WHERE j.[key] = 0

SELECT @Tsql = 'SELECT ' + CHAR(13) + CHAR(10) +

@Tsql + CHAR(13) + CHAR(10) +

'FROM OPENJSON(''' + @Json + ''') j';

--SELECT @Tsql;

EXEC (@Tsql);

END

Examples

Here are a couple of code samples that show how it works.

DECLARE @Json NVARCHAR(MAX) =

(

SELECT TOP(5) *

FROM AdventureWorks.Production.ProductListPriceHistory

FOR JSON AUTO

);

EXEC tempdb.dbo.OpenJsonTabular @Json;

SET @Json =

(

SELECT TOP(5) *

FROM msdb.sys.objects

FOR JSON AUTO

)

EXEC tempdb.dbo.OpenJsonTabular @Json;

As you may have expected, the result sets from each execution are different:

I don't know if any of this could be considered "schema on read". Maybe it's automated schema on read. Or maybe it's just an abomination. It may be icky, but I still had fun working on it. Converting relational data to JSON and then back to a tabular result set isn't terribly useful, of course. But I have at least one use case that involves serializing external data to JSON and then "querying" it with the OpenJsonTabular procedure. Perhaps more on this in another post.

Comments